Can generative AI designed for the enterprise (for example, AI that autocompletes reports, spreadsheet formulas and so on) ever be interoperable? Along with a coterie of organizations including Cloudera and Intel, the Linux Foundation — the nonprofit organization that supports and maintains a growing number of open source efforts — aims to find out.

The Linux Foundation on Tuesday announced the launch of the Open Platform for Enterprise AI (OPEA), a project to foster the development of open, multi-provider and composable (i.e. modular) generative AI systems. Under the purview of the Linux Foundation’s LF AI and Data org, which focuses on AI- and data-related platform initiatives, OPEA’s goal will be to pave the way for the release of “hardened,” “scalable” generative AI systems that “harness the best open source innovation from across the ecosystem,” LF AI and Data’s executive director, Ibrahim Haddad, said in a press release.

“OPEA will unlock new possibilities in AI by creating a detailed, composable framework that stands at the forefront of technology stacks,” Haddad said. “This initiative is a testament to our mission to drive open source innovation and collaboration within the AI and data communities under a neutral and open governance model.”

In addition to Cloudera and Intel, OPEA, one of the Linux Foundation's Sandbox Projects (an incubator program of sorts), counts among its members enterprise heavyweights like IBM-owned Red Hat, Hugging Face, Domino Data Lab, MariaDB and VMware.

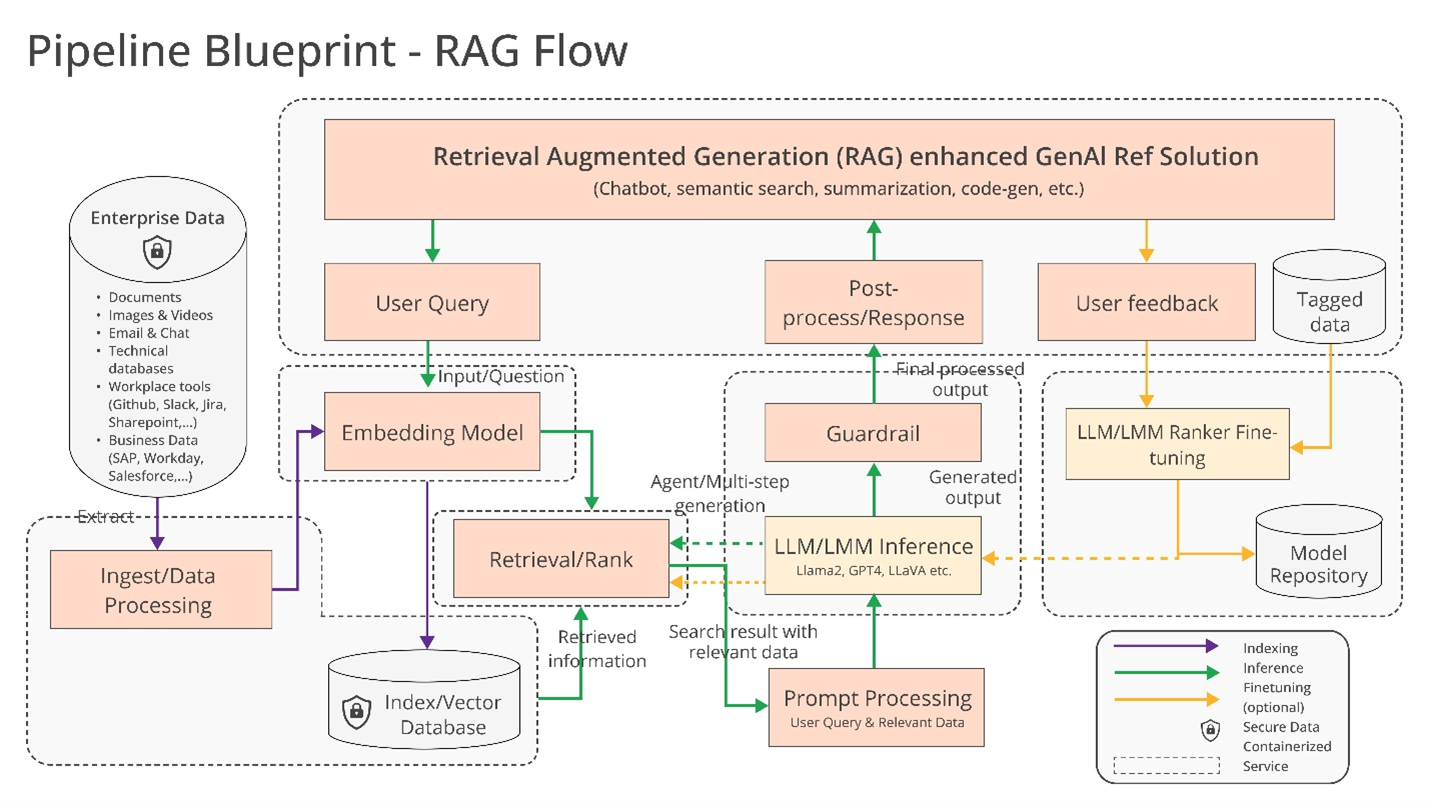

So what might they build together exactly? Haddad hints at a few possibilities, such as “optimized” support for AI toolchains and compilers, which enable AI workloads to run across different hardware components, as well as “heterogeneous” pipelines for retrieval-augmented generation (RAG).

RAG is becoming increasingly popular in enterprise applications of generative AI, and it’s not difficult to see why. Most generative AI models’ answers and actions are limited to the data on which they’re trained. But with RAG, a model’s knowledge base can be extended to info outside the original training data. RAG models reference this outside info — which can take the form of proprietary company data, a public database or some combination of the two — before generating a response or performing a task.

A diagram explaining RAG models. Image Credits: Intel
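To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It is not OPEA code: the `Retriever` protocol, the `KeywordRetriever` toy ranker, `build_prompt`, `answer` and the `generate` callable are hypothetical names chosen for illustration. A production pipeline would typically use an embedding index and a real model endpoint rather than keyword overlap and a stub. Hiding retrieval behind a small interface is one way to get the swappable, "composable" components OPEA describes.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class Document:
    doc_id: str
    text: str


class Retriever(Protocol):
    """Hypothetical interface: any component returning the k most relevant documents."""

    def retrieve(self, query: str, k: int) -> list[Document]: ...


class KeywordRetriever:
    """Toy retriever (an assumption for illustration): ranks docs by shared query words."""

    def __init__(self, docs: list[Document]) -> None:
        self.docs = docs

    def retrieve(self, query: str, k: int) -> list[Document]:
        query_terms = set(query.lower().split())
        scored = [(len(query_terms & set(d.text.lower().split())), d) for d in self.docs]
        # Drop documents with no overlapping terms, then sort best match first.
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [d for _, d in scored[:k]]


def build_prompt(query: str, context: list[Document]) -> str:
    """Prepend retrieved text so the model can draw on data outside its training set."""
    context_block = "\n".join(f"[{d.doc_id}] {d.text}" for d in context)
    return f"Context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


def answer(query: str, retriever: Retriever, generate: Callable[[str], str], k: int = 2) -> str:
    # 1. Retrieve relevant company data, 2. augment the prompt, 3. generate a response.
    docs = retriever.retrieve(query, k)
    return generate(build_prompt(query, docs))


if __name__ == "__main__":
    docs = [
        Document("hr-1", "employees accrue 20 vacation days per year"),
        Document("it-7", "reset your vpn password from the self-service portal"),
    ]
    echo = lambda prompt: prompt  # stand-in for a real model call
    print(answer("how many vacation days per year", KeywordRetriever(docs), echo, k=1))
```

Because `answer` only depends on the `Retriever` protocol and a plain `generate` function, either piece could be replaced (a different vector store, a different model vendor) without touching the rest of the pipeline.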

Intel offered a few more details in its own press release:

> Enterprises are challenged with a do-it-yourself approach [to RAG] because there are no de facto standards across components that allow enterprises to choose and deploy RAG solutions that are open and interoperable and that help them quickly get to market. OPEA intends to address these issues by collaborating with the industry to standardize components, including frameworks, architecture blueprints and reference solutions.

Evaluation will also be a key part of what OPEA tackles.

In its GitHub repository, OPEA proposes a rubric for grading generative AI systems along four axes: performance, features, trustworthiness and “enterprise-grade” readiness. Performance as OPEA defines it pertains to “black-box” benchmarks from real-world use cases. Features is an appraisal of a system’s interoperability, deployment choices and ease of use. Trustworthiness looks at an AI model’s ability to guarantee “robustness” and quality. And enterprise readiness focuses on the requirements to get a system up and running sans major issues.

Rachel Roumeliotis, director of open source strategy at Intel, says that OPEA will work with the open source community to offer tests based on the rubric, as well as provide assessments and grading of generative AI deployments on request.

OPEA’s other endeavors are a bit up in the air at the moment. But Haddad floated the potential of open model development along the lines of Meta’s expanding Llama family and Databricks’ DBRX. Toward that end, in the OPEA repo, Intel has already contributed reference implementations for a generative-AI-powered chatbot, document summarizer and code generator optimized for its Xeon 6 and Gaudi 2 hardware.

Now, OPEA’s members are very clearly invested (and self-interested, for that matter) in building tooling for enterprise generative AI. Cloudera recently launched partnerships to create what it’s pitching as an “AI ecosystem” in the cloud. Domino offers a suite of apps for building and auditing business-forward generative AI. And VMware — oriented toward the infrastructure side of enterprise AI — last August rolled out new “private AI” compute products.

The question is whether these vendors will actually work together to build cross-compatible AI tools under OPEA.

There's an obvious benefit to doing so. Customers will happily draw on multiple vendors depending on their needs, resources and budgets. But history has shown how easily vendors drift toward lock-in. Let's hope that's not the ultimate outcome here.
